# Blocked: 3D Scanning Meets Robotic Manipulation

A Sawyer, a Turtlebot3, and a RealSense camera walk into a bar...

Overview:

For our final project in ME495, our team set out to take a 3D scan of an object and import it into Minecraft. Our solution was to use the Turtlebot3 as a turntable while the Sawyer arm positioned the RealSense camera (mounted on the arm) around the Turtlebot3 to capture the necessary views as pointclouds.

The captured pointclouds are then fused into a single multi-faced pointcloud (five faces by default), which is saved as a Point Cloud Data (PCD) file, converted to a PLY file, and then imported into Minecraft.
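The writeup does not say which tool performed the PCD-to-PLY conversion (libraries such as Open3D can do it with `open3d.io.read_point_cloud` and `open3d.io.write_point_cloud`). As a dependency-free illustration of what the conversion involves, here is a minimal sketch that assumes an ASCII PCD file and keeps only the x, y, z fields; the function names are hypothetical and binary PCD files are not handled.

```python
def read_ascii_pcd(text):
    """Parse an ASCII PCD string into a list of (x, y, z) tuples.

    Sketch only: supports "DATA ascii" files whose FIELDS include x, y and z
    (extra fields such as rgb are ignored); binary PCD files are rejected.
    """
    fields, points, xyz_idx = [], [], None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if xyz_idx is not None:
            # Past the header: every remaining line is one point.
            values = line.split()
            points.append(tuple(float(values[i]) for i in xyz_idx))
            continue
        key, *rest = line.split()
        if key == "FIELDS":
            fields = rest
        elif key == "DATA":
            if rest != ["ascii"]:
                raise ValueError("this sketch only reads ASCII PCD files")
            xyz_idx = [fields.index(axis) for axis in ("x", "y", "z")]
    return points


def write_ascii_ply(points):
    """Serialize (x, y, z) tuples as an ASCII PLY file containing vertices only."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    body = [f"{x} {y} {z}" for x, y, z in points]
    return "\n".join(header + body) + "\n"


SAMPLE_PCD = """# .PCD v0.7 - Point Cloud Data file format
VERSION 0.7
FIELDS x y z
SIZE 4 4 4
TYPE F F F
COUNT 1 1 1
WIDTH 2
HEIGHT 1
VIEWPOINT 0 0 0 1 0 0 0
POINTS 2
DATA ascii
0.1 0.2 0.3
-0.5 0.0 1.25
"""

ply_text = write_ascii_ply(read_ascii_pcd(SAMPLE_PCD))
print(ply_text)
```

Looking up the x, y, z column indices from the FIELDS line lets the same reader accept RealSense-style clouds that carry extra per-point fields such as color.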

The result is an automated multi-robot system that captures, processes, fuses, and crops pointclouds, and that could be applied to virtual 3D reconstruction in industries such as packaging and shipping.
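The writeup does not describe how the pointclouds are cropped. A common approach is to keep only the points inside a box around the object, which discards the floor and background. The sketch below assumes points are already expressed in a frame whose origin is the turntable center with z pointing up; the box bounds are hypothetical values, not the project's.

```python
def crop_box(points, min_corner, max_corner):
    """Keep only the points that lie inside an axis-aligned box (bounds inclusive)."""
    return [
        p for p in points
        if all(lo <= c <= hi for c, lo, hi in zip(p, min_corner, max_corner))
    ]


# Hypothetical bounds (meters) for a box around the object on the turntable:
# +/-0.15 m in x and y, and from the turntable surface (z = 0) up to 0.4 m.
BOX_MIN = (-0.15, -0.15, 0.0)
BOX_MAX = (0.15, 0.15, 0.4)

cloud = [
    (0.0, 0.0, 0.1),     # on the object
    (0.05, -0.02, 0.3),  # on the object
    (0.9, 0.0, 0.2),     # background wall, outside the box in x
    (0.0, 0.0, -0.01),   # below the turntable surface
]
print(crop_box(cloud, BOX_MIN, BOX_MAX))
```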

Hardware:

This project required the following main components:

* Turtlebot3

* Sawyer

* RealSense Camera

Networking required the two robots and the computer to communicate through the same ROS_MASTER_URI over a shared router. The clocks on all three machines also had to be synchronized so that timestamped data from each robot could be used together.

Software:

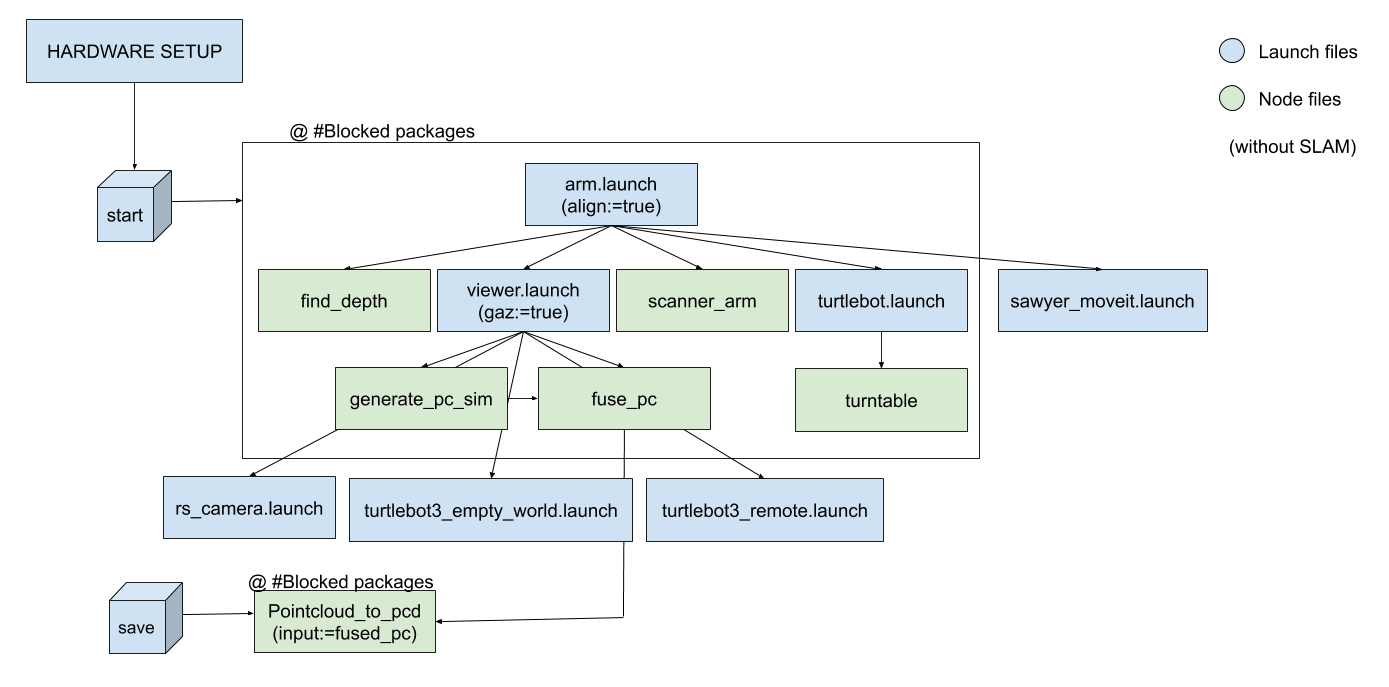

The code uses ROS with both Python and C++ and is split into three packages: arm_motion, camera_motion, and camera_reconstruct.

* arm_motion: controls the motion of the arm and the Turtlebot3 to position the camera for scanning

* camera_motion: processes, aligns, and stabilizes images to be published to the camera_reconstruct nodes

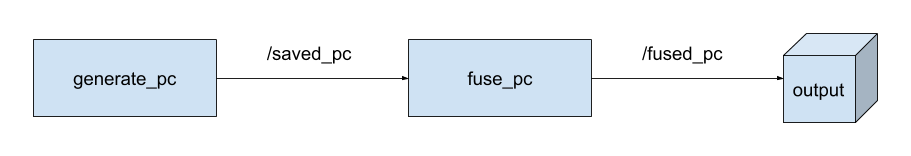

* camera_reconstruct: fuses the received pointclouds and saves the final product as a PCD file

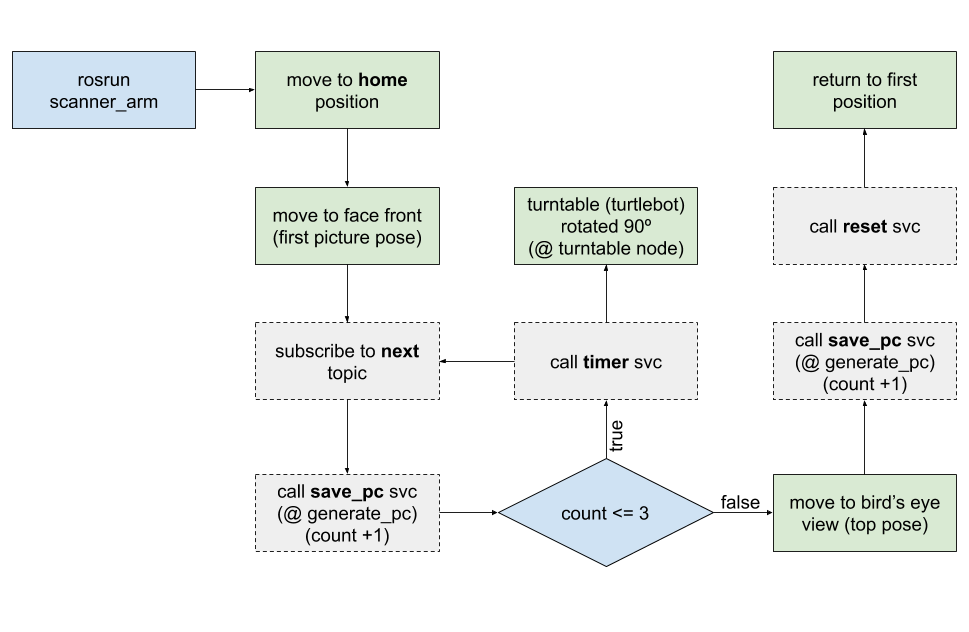

As shown in the diagram, the whole system is started by launching the arm.launch file, which includes the other necessary files from the three packages.

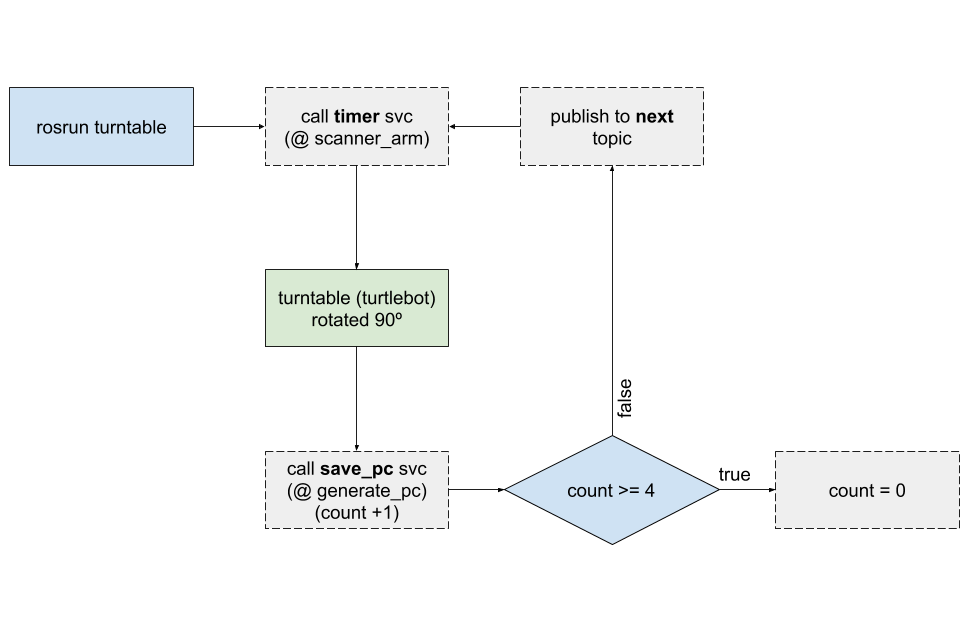

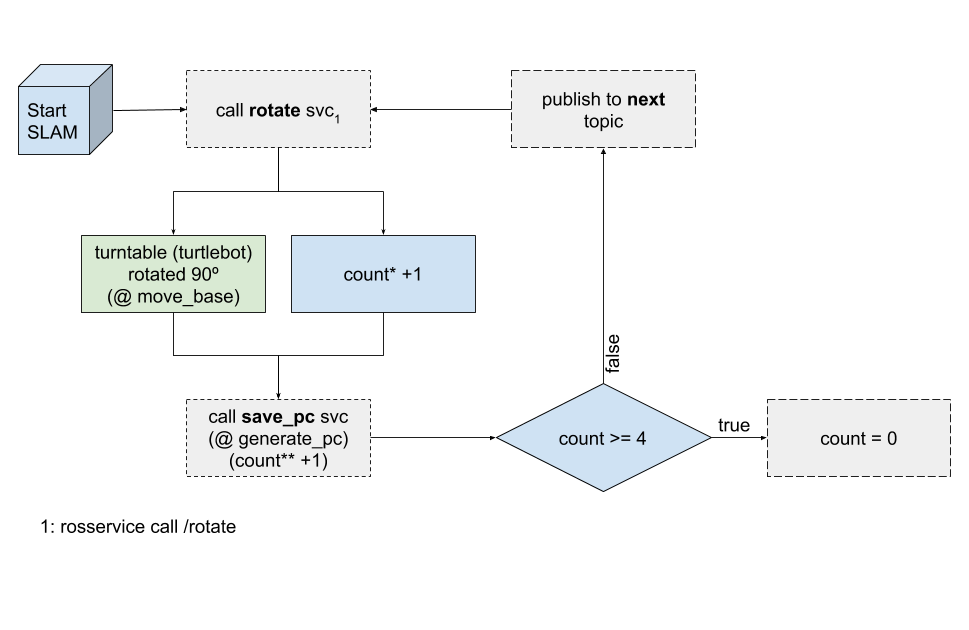

The arm_motion package contains the turntable and scanner_arm nodes, which control the Turtlebot3 and the Sawyer arm, respectively. The default positions capture a total of five individual pointclouds: the front, back, two sides, and top of the object.

To do this, the object sits on the center of the Turtlebot3, which rotates it by a specified increment on each call while the camera faces the current side of the object, perpendicular to it and approximately 50 cm away. After the Turtlebot3 rotates a third time, the Sawyer arm moves the camera so that it faces the top of the object for the final individual pointcloud.
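The capture sequence can be summarized as a small plan: side views from a fixed camera while the turntable steps, then one top view after the arm moves. The sketch below is hypothetical (the `View` class, `plan_views`, the 90-degree step implied by four sides, and the 0.15 m camera height are assumptions, not the project's code); only the five views, the three rotations, and the roughly 50 cm standoff come from the description above.

```python
from dataclasses import dataclass


@dataclass
class View:
    name: str
    turntable_yaw_deg: float  # total rotation the Turtlebot3 has applied to the object
    camera_xyz: tuple         # camera position (m) relative to the turntable center
    facing_down: bool         # True only for the top view


def plan_views(n_sides=4, distance=0.5, side_height=0.15):
    """Hypothetical capture plan: n_sides side views from a fixed camera while the
    turntable steps by 360/n_sides degrees, then one top view from above."""
    step = 360.0 / n_sides
    views = [
        # The camera stays put; only the turntable rotates between side views.
        View(f"side_{i}", i * step, (distance, 0.0, side_height), False)
        for i in range(n_sides)
    ]
    # Final view: the arm lifts the camera above the object; the turntable does not move.
    views.append(View("top", (n_sides - 1) * step, (0.0, 0.0, side_height + distance), True))
    return views


for view in plan_views():
    print(view)
```

With the default of four sides, the turntable changes angle exactly three times before the top view, which matches the sequence described above.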

The camera_motion package aligns the center of the depth camera with the object being scanned; its find_depth node publishes the depth value of the center pixel. It also stabilizes the depth values using the camera_motion Python library so that the resulting pointclouds are of sufficient quality.
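The writeup does not specify how the camera_motion library stabilizes depth. As one plausible illustration, the sketch below reads the center pixel of a depth image (indexed as rows of columns) and smooths successive readings with a sliding-window median. The median filter, the window size, and the class and function names are assumptions standing in for the project's actual method. Zero readings are dropped because the RealSense reports 0 for pixels with no valid depth.

```python
from collections import deque
from statistics import median


def center_depth(depth_image):
    """Return the depth value at the center pixel of a row-major 2-D depth image."""
    rows, cols = len(depth_image), len(depth_image[0])
    return depth_image[rows // 2][cols // 2]


class DepthSmoother:
    """Hypothetical stabilizer: median of the last `window` valid depth readings."""

    def __init__(self, window=5):
        self.samples = deque(maxlen=window)  # oldest readings fall off automatically

    def update(self, depth):
        if depth > 0:  # 0 means "no depth" on the RealSense, so ignore it
            self.samples.append(depth)
        return median(self.samples) if self.samples else None


smoother = DepthSmoother(window=3)
for reading in [0.50, 0.52, 0.0, 0.90, 0.51]:
    print(smoother.update(reading))
```

A median is used here rather than a plain average so that a single spike (the 0.90 reading above) does not drag the published depth away from the object.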

Finally, these nodes rely on two nodes in the camera_reconstruct package, generate_pc and fuse_pc. Together they receive the continuous stream of pointclouds from the RealSense camera, transform each view to follow the rotation of the Turtlebot3, and combine the views one by one, producing a single PCD file per run. This way, the user chooses which views to save (by calling the save_pc service) and does not have to filter through a folder full of pointclouds.
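The core of fusing turntable views is undoing the turntable's rotation: a point that the Turtlebot3 has carried through a yaw of θ about the vertical axis is rotated by -θ to bring it back into the object's own frame, after which the views can simply be concatenated. The sketch below assumes each view has already been transformed from the camera frame into a frame centered on the turntable axis with z up; `fuse_views` and its input format are hypothetical and do not reproduce the project's fuse_pc node or its ROS message handling.

```python
import math


def rotate_about_z(points, yaw_rad):
    """Rotate (x, y, z) points counterclockwise by yaw_rad about the z axis."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]


def fuse_views(views):
    """Fuse (turntable_yaw_deg, points) pairs into one cloud in the object frame.

    Each view is rotated back by the yaw the turntable had applied when it was
    captured, so every view lines up with the first one, then all are concatenated.
    """
    fused = []
    for yaw_deg, points in views:
        fused.extend(rotate_about_z(points, -math.radians(yaw_deg)))
    return fused


# The same physical point seen before and after a 90-degree turntable step.
views = [
    (0.0, [(0.1, 0.0, 0.2)]),
    (90.0, [(0.0, 0.1, 0.2)]),
]
for point in fuse_views(views):
    print(tuple(round(c, 6) for c in point))
```

Both observations map back to the same object-frame point, which is why any error in the assumed turntable angle shows up directly as misaligned faces.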

The packages include two tests: arm_motion/test/turntable.test and camera_motion/test/smoothing_test.py.

Although the method above produced a multi-faced pointcloud, the views did not line up as intended. Because the misalignment came from the Turtlebot3's movement, we addressed it with SLAM.

The top image below shows the resulting pointcloud of a Windex bottle without SLAM. The bottom image shows the pointcloud of a Clorox container produced with SLAM. Comparing the two, adding SLAM clearly improved the overall accuracy of the model.

Future work:

Although our team produced a multi-faced pointcloud with an acceptable level of accuracy, the default number of faces was limited to five for simplicity. Further development could save pointclouds near-continuously over time and use an iterative closest point (ICP) algorithm to consolidate the collection, as in the example below.
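To make the ICP idea concrete, here is a minimal sketch of a yaw-only (planar) variant of point-to-point ICP. Restricting the motion to a rotation about the vertical axis plus a translation is an assumption that suits turntable scanning and gives a closed-form fit per iteration; a general implementation estimates a full 6-DoF transform (typically via SVD) and uses a k-d tree for matching. Libraries such as Open3D provide ICP registration in their registration module. All names below are hypothetical.

```python
import math


def apply_planar(points, theta, t):
    """Rotate points by theta (rad) about z, then translate by t = (tx, ty, tz)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1], z + t[2]) for x, y, z in points]


def nearest(point, cloud):
    """Brute-force nearest neighbor; fine for small clouds, use a k-d tree otherwise."""
    return min(cloud, key=lambda q: sum((a - b) ** 2 for a, b in zip(point, q)))


def best_planar_fit(src, dst):
    """Closed-form least-squares yaw and translation mapping paired src points onto dst."""
    n = len(src)
    cs = [sum(p[k] for p in src) / n for k in range(3)]  # source centroid
    cd = [sum(q[k] for q in dst) / n for k in range(3)]  # destination centroid
    num = den = 0.0
    for (px, py, _), (qx, qy, _) in zip(src, dst):
        ax, ay = px - cs[0], py - cs[1]
        bx, by = qx - cd[0], qy - cd[1]
        num += ax * by - ay * bx  # cross terms, proportional to sin(theta)
        den += ax * bx + ay * by  # dot terms, proportional to cos(theta)
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    t = (cd[0] - (c * cs[0] - s * cs[1]), cd[1] - (s * cs[0] + c * cs[1]), cd[2] - cs[2])
    return theta, t


def icp_planar(source, target, iterations=20, tol=1e-9):
    """Yaw-only point-to-point ICP: returns (aligned_points, total_theta, total_t)."""
    current = list(source)
    total_theta, total_t = 0.0, (0.0, 0.0, 0.0)
    for _ in range(iterations):
        matches = [nearest(p, target) for p in current]  # 1) correspondences
        theta, t = best_planar_fit(current, matches)      # 2) best rigid fit
        current = apply_planar(current, theta, t)         # 3) move the source
        # Compose this step with the running estimate: T_total = T_step * T_total.
        c, s = math.cos(theta), math.sin(theta)
        tx, ty, tz = total_t
        total_t = (c * tx - s * ty + t[0], s * tx + c * ty + t[1], tz + t[2])
        total_theta += theta
        if abs(theta) < tol and all(abs(v) < tol for v in t):
            break  # converged: the last step barely moved the points
    return current, total_theta, total_t


target = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0),
          (0.0, 1.0, 0.5), (0.0, 2.0, 1.0), (1.5, 1.5, 0.2)]
# Simulate a second scan of the same object, offset by a small yaw and shift.
source = apply_planar(target, 0.1, (0.05, -0.03, 0.02))
aligned, theta, t = icp_planar(source, target)
print(round(theta, 6))
```

Because correspondences come from nearest neighbors, ICP only converges to the right answer when the scans start roughly aligned; the turntable's commanded angle would be a natural initial guess.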